The end-to-end platform to optimize and scale LLM applications

Scale GenAI with full control and no compromises.

Scaling GenAI with traditional DevOps leads to API complexity, unpredictable outputs, and slow releases.

Optigen streamlines the AI lifecycle by integrating data management, prompt engineering, and evaluation into a unified workflow.

With observability, retrieval-augmented generation (RAG), and optimized deployment, teams can fine-tune models, automate processes, and move AI to production with ease.

End-to-end tooling to scale LLM apps

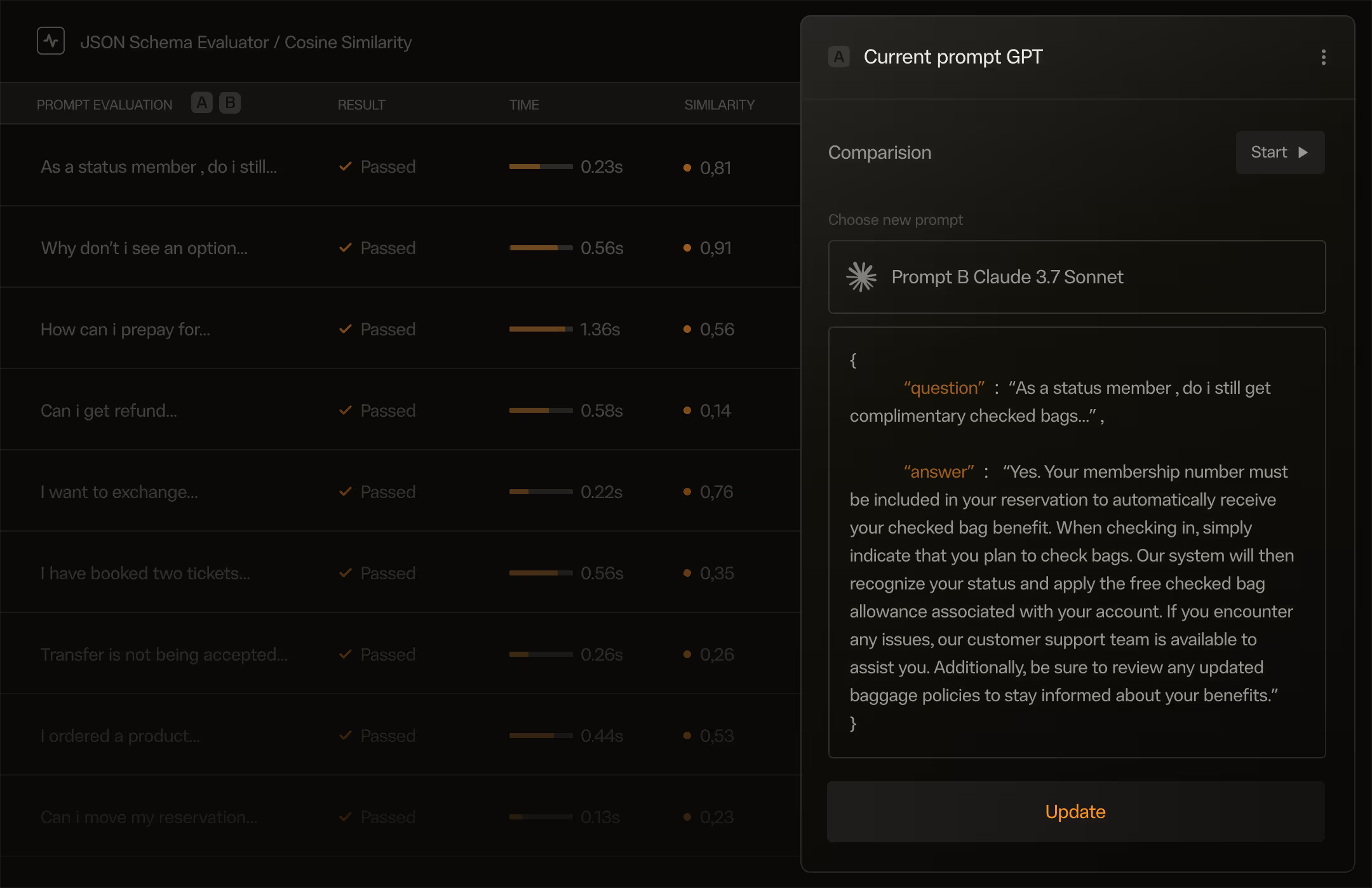

Evaluator Library

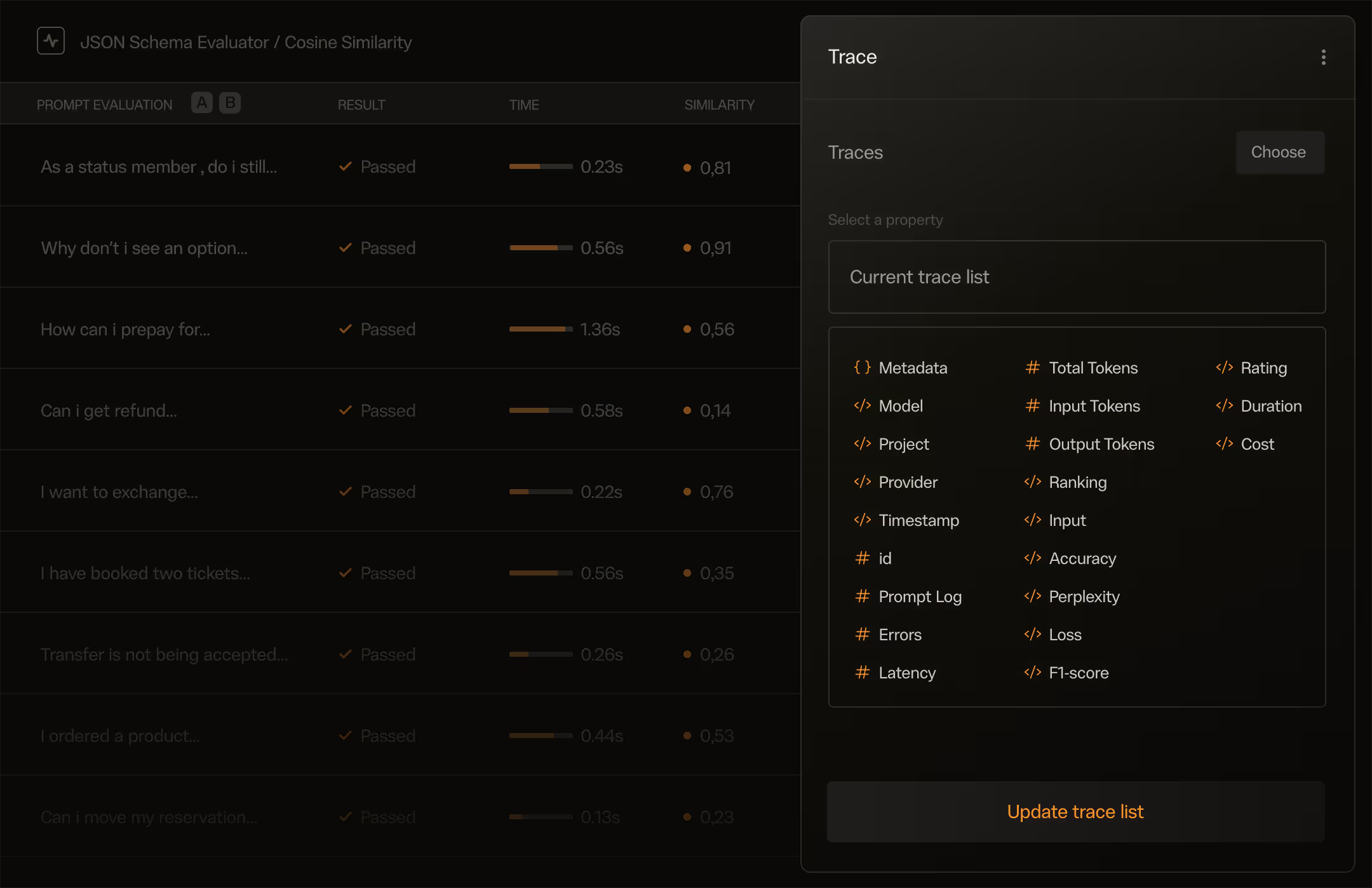

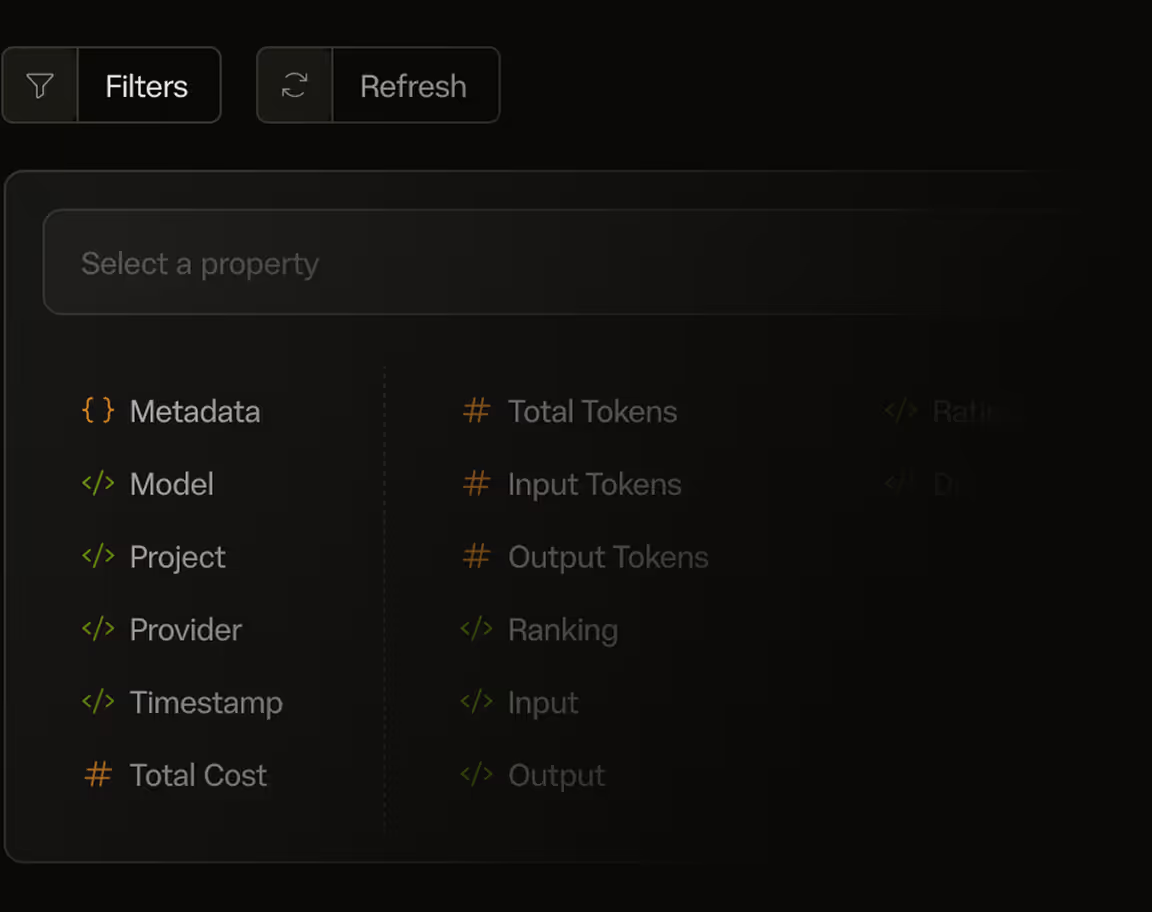

LLM Observability

Prompt Engineering

Experimentation

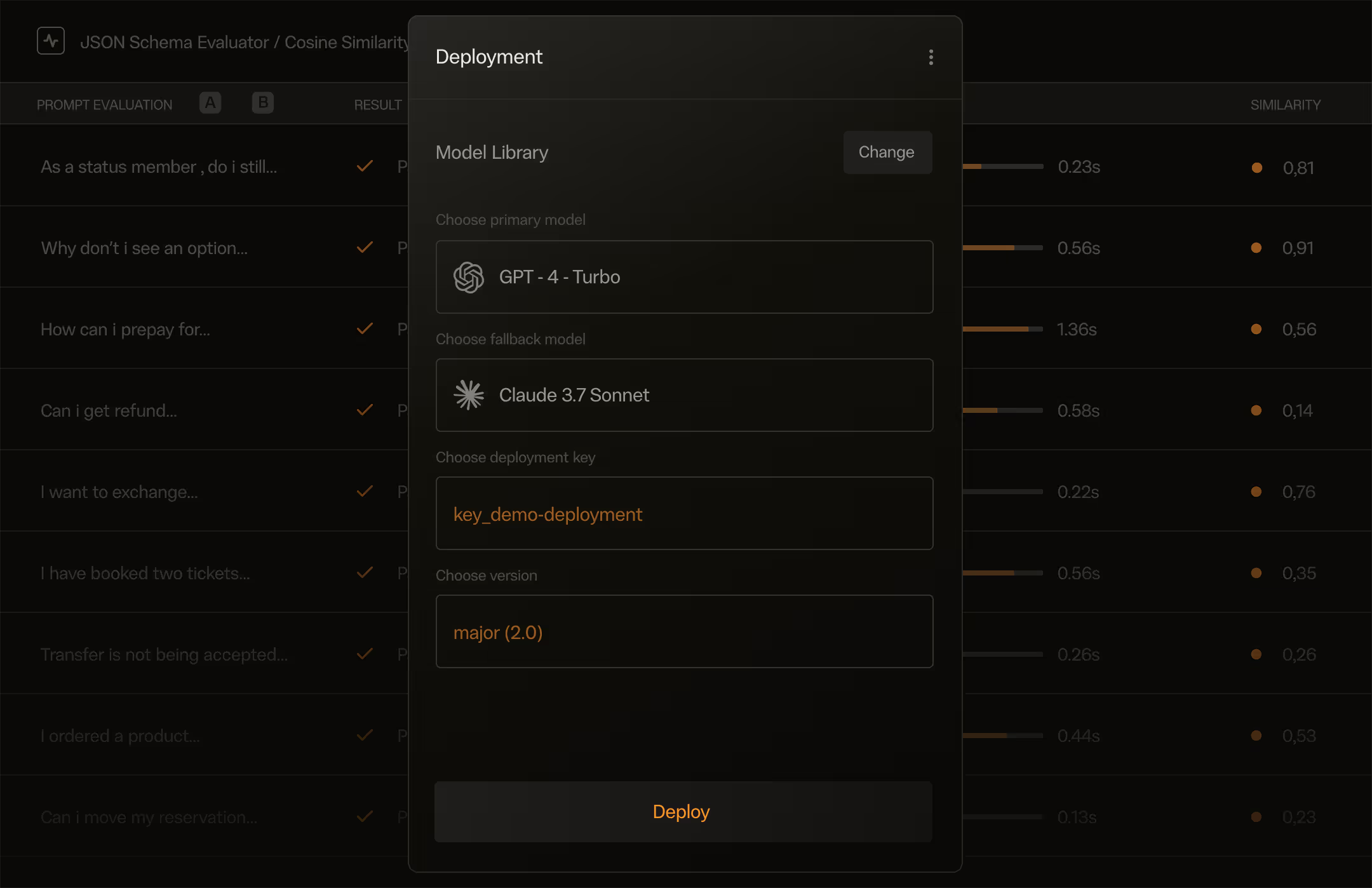

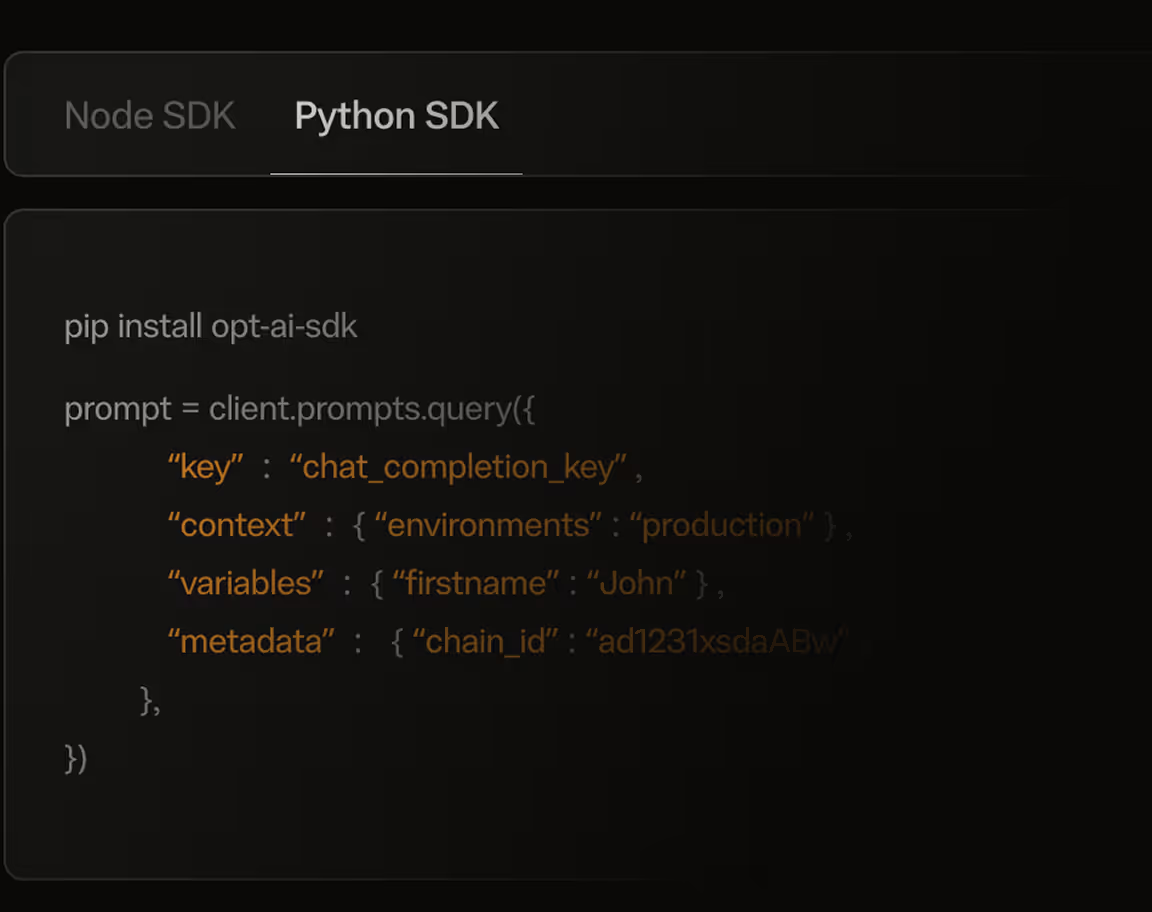

AI Gateway

Access 150+ AI Models

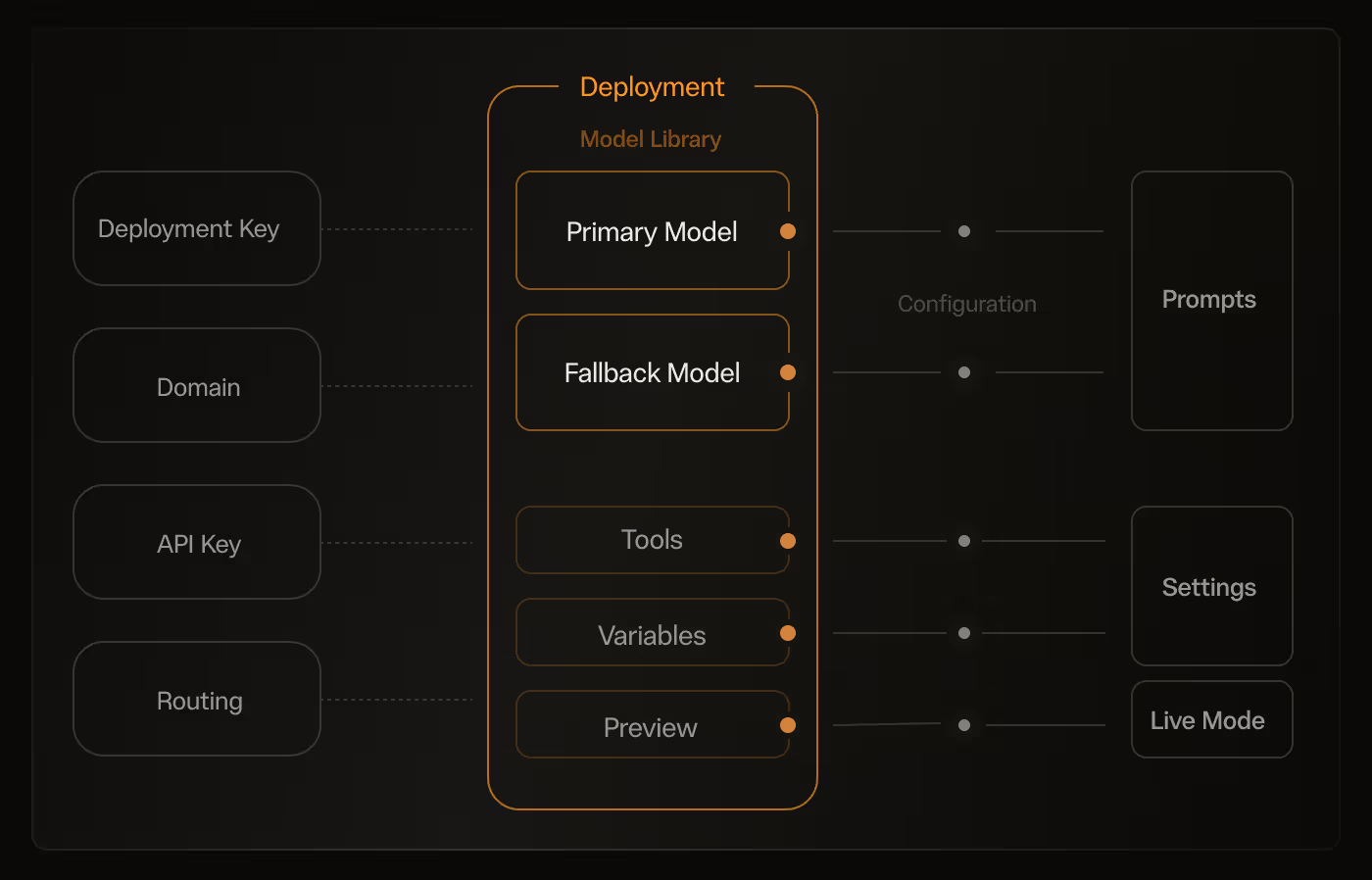

Deployments

Trace and debug complex pipelines

Monitor LLM app performance

Why teams choose Optigen

Use Optigen with OpenAI

"Optigen transformed our AI workflow, cutting deployment time in half. Before, we struggled with scattered tools and slow iterations. Now, our entire AI lifecycle is managed in one place, from testing to production. The streamlined approach has improved team collaboration and allowed us to push reliable LLM-based products to market faster than ever."

"Scaling AI solutions used to be a challenge, requiring technical expertise. With Optigen’s intuitive UI, even non-technical stakeholders can contribute effectively, enabling cross-team collaboration. Engineers can focus on optimization while product managers and analysts stay involved without friction. This has accelerated our AI development."

"As an enterprise handling sensitive data, security is our top priority. Optigen provides control over PII while allowing us to optimize AI performance at scale. Security features ensure compliance with industry regulations, while flexible deployment lets us integrate AI solutions. It’s the best tool we’ve found for secure, scalable AI development."